
MLP_Init

| Header: | FIL.h |
|---|---|
| Namespace: | fil |
| Module: | FoundationPro |

Creates a multilayer perceptron model.

Syntax

C++


```cpp
void fil::MLP_Init
(
	ftl::Optional<const ftl::Array<int>&> inHiddenLayers,
	fil::ActivationFunction::Type inActivationFunction,
	fil::MlpPreprocessing::Type inPreprocessing,
	ftl::Optional<int> inRandomSeed,
	int inInputCount,
	int inOutputCount,
	fil::MlpModel& outMlpModel
)
```

Parameters

| Name | Type | Range | Default | Description |
|---|---|---|---|---|
| inHiddenLayers | `Optional<const Array<int>&>` | | NIL | Internal structure of the MLP network |
| inActivationFunction | `ActivationFunction::Type` | | | Type of activation function used to calculate the neuron response |
| inPreprocessing | `MlpPreprocessing::Type` | | | Method of preprocessing input data before learning |
| inRandomSeed | `Optional<int>` | 0 - | NIL | Number used as the starting random seed |
| inInputCount | `int` | 1 - | 1 | Number of MLP network inputs |
| inOutputCount | `int` | 1 - | 1 | Number of MLP network outputs |
| outMlpModel | `MlpModel&` | | | Initialized MlpModel |

Description

This function initializes an MlpModel and sets its structure.

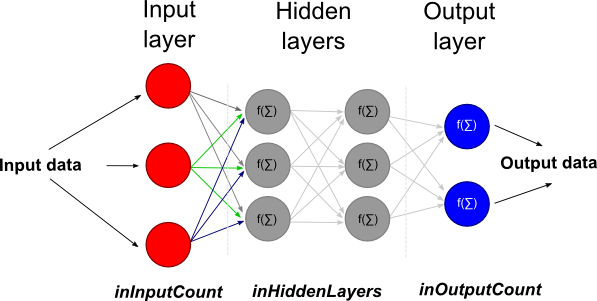

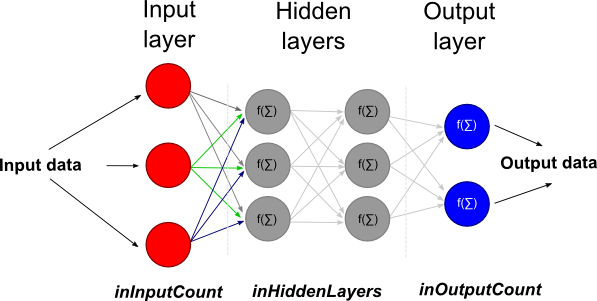

Image: Internal structure of the MlpModel. The function f denotes the activation function selected by inActivationFunction.

The inHiddenLayers parameter specifies the number of neurons in each consecutive hidden layer, so the length of the array equals the number of hidden layers.

The inActivationFunction parameter selects the function used to calculate the activation of the internal neurons.

The weights of the multilayer perceptron are initialized with random numbers whose values depend on inRandomSeed.

The inInputCount and inOutputCount parameters define the number of network inputs and outputs, respectively.

See Also

- MLP_Train – Creates and trains multilayer perceptron classifier.

- MLP_Respond – Calculates multilayer perceptron answer.